Protect your digital space with DeepBrain AI's online deepfake detector, designed to identify AI-generated content quickly and accurately within minutes.

Easily identify advanced deepfake videos that are difficult to spot with the naked eye.

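DeepBrain AI's deepfake detection tool is powered by advanced deep learning algorithms that examine multiple elements of video content to distinguish and detect different types of synthetic media manipulation.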

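Upload your video and our AI will analyze it, delivering an accurate assessment within five minutes of whether it was created using deepfake or other AI technology.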

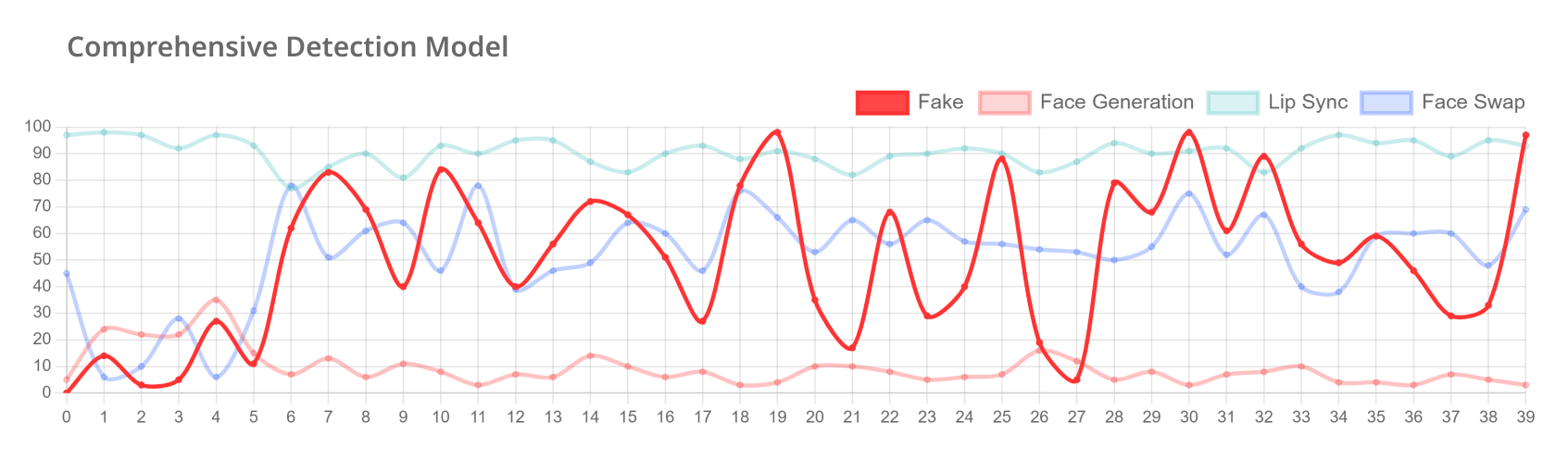

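We accurately detect many forms of deepfakes, such as face swaps, lip-sync manipulation, and AI-generated videos, so you can be confident the content you rely on is authentic.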

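Detect manipulated videos and media quickly and accurately to guard against a range of deepfake crimes. DeepBrain AI's detection solution helps prevent fraud, identity theft, personal exploitation, and misinformation campaigns.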

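We continuously improve our technology to combat deepfakes, protect vulnerable groups, and provide actionable insights against digital exploitation. We are committed to empowering organizations to protect their digital integrity effectively.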

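We provide solutions to, and work with, law enforcement agencies, including the Korean National Police Agency, to improve our deepfake detection software and respond to related crimes more quickly.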

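DeepBrain AI was selected by Korea's Ministry of Science and ICT to lead the "Deepfake Manipulated Video AI Data" project in collaboration with Seoul National University's AI research lab (DASIL).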

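We offer businesses, government agencies, and educational institutions a one-month free demo to help them combat AI-generated video crime and strengthen their response capabilities.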

Check out our FAQ for quick answers on our deepfake detection solution.

A deepfake is synthetic media created using artificial intelligence and machine learning techniques. It typically involves manipulating or generating visual and audio content to make it appear as if a person has said or done something that they haven't in reality. Deepfakes can range from face swaps in videos to entirely AI-generated images or voices that mimic real people with a high degree of realism.

DeepBrain AI's deepfake detection solution is designed to identify and filter out AI-generated fake content. It can spot various types of deepfakes, including face swaps, lip syncs, and AI/computer-generated videos. The system works by comparing suspicious content with original data to verify authenticity. This technology helps prevent potential harm from deepfakes and supports criminal investigations. By quickly flagging artificial content, DeepBrain AI's solution aims to protect individuals and organizations from deepfake-related threats.
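DeepBrain AI does not describe how its comparison against original data works. As a purely generic illustration of the idea of checking a suspect frame against a known original, the sketch below uses a perceptual "average hash", a common and much simpler technique that is not DeepBrain AI's method. The function names and the `max_bits` threshold are hypothetical, and frames are assumed to be already converted to small grayscale pixel grids.

```python
from typing import List

# A grayscale frame, already downscaled (e.g. to 8x8); values are 0-255.
# This representation is an assumption made for the sketch.
Frame = List[List[int]]


def average_hash(frame: Frame) -> int:
    """Generic perceptual 'average hash': one bit per pixel, set when the
    pixel is brighter than the frame's mean. Not DeepBrain AI's algorithm."""
    pixels = [p for row in frame for p in row]
    mean = sum(pixels) / len(pixels)
    bits = 0
    for p in pixels:
        # Shift in one bit per pixel; the first pixel ends up most significant.
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two hashes."""
    return bin(a ^ b).count("1")


def matches_original(suspect: Frame, original: Frame, max_bits: int = 5) -> bool:
    """Hypothetical check: treat the suspect frame as consistent with the
    original when their hashes differ in at most `max_bits` bits."""
    return hamming(average_hash(suspect), average_hash(original)) <= max_bits


if __name__ == "__main__":
    original = [[10, 20], [200, 210]]
    tampered = [[200, 210], [10, 20]]  # bright and dark regions swapped
    print(matches_original(original, original, max_bits=1))
    print(matches_original(tampered, original, max_bits=1))
```

A reference-based check like this only works when an original is available; detecting a fully synthetic video with no known source requires a trained classifier instead.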

Each deepfake detection system uses different techniques to spot manipulated content. DeepBrain AI’s deepfake detection process leverages a multi-step method to verify authenticity:

This multi-step approach allows DeepBrain AI to thoroughly analyze videos, images, and audio to determine if they are genuine or artificially created.

The accuracy of DeepBrain AI's deepfake detection technology varies as the technology evolves, but it generally detects deepfakes with over 90% accuracy, and this figure continues to improve as the company refines its technology.

DeepBrain AI's current deepfake solution focuses on rapid detection rather than preemptive blocking. The system quickly analyzes videos, images, and audio, typically delivering results within 5–10 minutes. It categorizes content as "real" or "fake" and provides data on alteration rates and synthesis types.
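To make the shape of such a result concrete, here is a minimal sketch of how per-frame scores could be rolled up into a "real"/"fake" verdict with an alteration rate and a set of synthesis types. Everything here is hypothetical: DeepBrain AI does not publish its report format, and the `FrameResult`/`DetectionReport` types, the per-frame `fake_score`, and both thresholds are assumptions chosen for illustration. Only the three synthesis categories come from this page.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Optional, Set


class SynthesisType(Enum):
    # Categories named on this page; the enum itself is hypothetical.
    FACE_SWAP = "face_swap"
    LIP_SYNC = "lip_sync"
    AI_GENERATED = "ai_generated"


@dataclass
class FrameResult:
    fake_score: float  # hypothetical per-frame manipulation score, 0.0-1.0
    synthesis_type: Optional[SynthesisType] = None


@dataclass
class DetectionReport:
    verdict: str            # "real" or "fake"
    alteration_rate: float  # share of frames flagged as manipulated
    synthesis_types: Set[SynthesisType] = field(default_factory=set)


def summarize(
    frames: List[FrameResult],
    frame_threshold: float = 0.5,   # hypothetical: score at which a frame is flagged
    video_threshold: float = 0.1,   # hypothetical: flagged share that makes the video "fake"
) -> DetectionReport:
    """Roll per-frame scores up into a single report (illustrative only)."""
    flagged = [f for f in frames if f.fake_score >= frame_threshold]
    rate = len(flagged) / len(frames) if frames else 0.0
    types = {f.synthesis_type for f in flagged if f.synthesis_type is not None}
    verdict = "fake" if rate >= video_threshold else "real"
    return DetectionReport(verdict, rate, types)


if __name__ == "__main__":
    report = summarize([
        FrameResult(0.9, SynthesisType.LIP_SYNC),
        FrameResult(0.1),
        FrameResult(0.2),
        FrameResult(0.05),
    ])
    print(report.verdict, report.alteration_rate, report.synthesis_types)
```

Reporting an alteration rate alongside the verdict lets a reviewer tell a video with one doctored clip apart from one that is synthetic throughout.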

Because it is aimed at mitigating harm, the solution does not automatically remove or block content. Instead, it notifies relevant parties such as content moderators or individuals concerned about deepfake impersonation. The responsibility for acting on the results rests with these parties, not with DeepBrain AI.

DeepBrain AI is actively working with other organizations and companies to make preemptive blocking possible. For now, its detection solutions help review suspicious content and assist in investigating deepfake videos to reduce further harm.

Major tech companies are responding to the deepfake issue through collaborative initiatives aimed at mitigating the risks of deceptive AI content. In February 2024, they signed the "Tech Accord to Combat Deceptive Use of AI in 2024 Elections" at the Munich Security Conference. This agreement commits firms such as Microsoft, Google, and Meta to develop technologies that detect and counter misleading content, particularly in the context of elections. They are also developing digital watermarking techniques for authenticating AI-generated content and partnering with governments and academic institutions to promote ethical AI practices. In addition, companies continuously update their detection algorithms and raise public awareness of deepfake risks through educational campaigns.

While major tech companies are making strides to combat deepfakes, their efforts may not be enough. The vast amount of content on social media makes it nearly impossible to catch every instance of manipulated media, and more sophisticated deepfakes can evade detection for longer periods.

For individuals and organizations seeking additional protection, specialized solutions like DeepBrain AI offer a valuable layer of security. By continuously analyzing internet media and tracking specific individuals, DeepBrain AI helps mitigate the risks associated with deepfakes. In summary, while industry initiatives are important, a multi-faceted approach that includes specialized tools and public awareness is essential for effectively tackling the deepfake challenge.